The Symbiosis of AI and Science: Unraveling the Potentials of Large Language Models

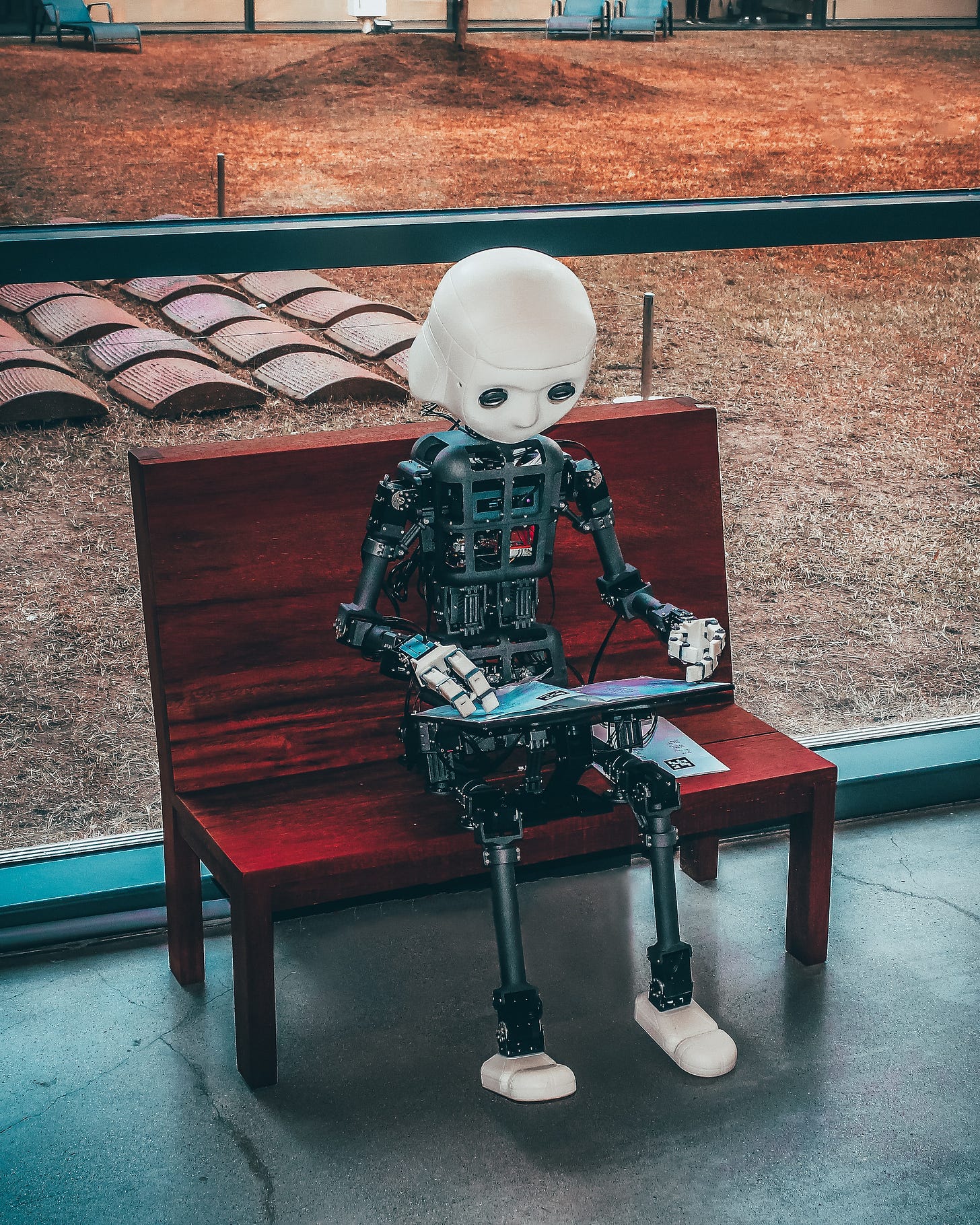

We might finally get the promised flying cars

Photo by Andrea De Santis on Unsplash

In the ceaseless quest for knowledge, humanity has always sought more effective ways to navigate the vast, labyrinthine landscape of understanding. Over time, our tools have evolved, from the primitive to the intricate, mirroring our own intellectual evolution. Today, standing on the brink of a new era, we find ourselves at an intersection where artificial intelligence collides and merges with the pursuit of science. At the center of this converging point, Large Language Models (LLMs) stand poised as transformative agents, promising to reconfigure our interactions with the vast domain of human knowledge.

As ChatGPT rose to prominence, the community built many tailored tools that adapt GPT and other LLMs to scientific research tasks. Here, we systematically explore the transformative potential and challenges of LLMs in the context of scientific research. We begin by unveiling the concept of LLMs as a two-way interface for human knowledge, a dynamic medium that fosters a symbiotic relationship between the human intellect and the encyclopedic domain of scientific literature. By acting as both a distiller and facilitator of knowledge, LLMs hold the potential to drastically reduce barriers to entry for individuals delving into new scientific fields, while simultaneously refining the process of knowledge contribution.

Next, we delve into the need for continuously evaluating and amplifying LLMs' proficiency in dealing with scientific queries. Drawing parallels to the pioneering works of intellectual giants like Hilbert, Gödel, and Turing, we explore the intriguing intersection of formal proofs and natural language. Here, we uncover the powerful, albeit complex, potential of LLMs to grapple with scientific concepts, pushing us to relentlessly refine their ability to comprehend, interpret, and eventually contribute to humanity's body of scientific knowledge.

In the final section, we journey into the realm of LLMs' "Chain of Thoughts," exploring their capacity to simulate the scientific method and mimic a scientist's approach to problem-solving. Despite their lack of consciousness, we posit that LLMs' 'thought simulation' opens exciting avenues for hypothesis generation, experimental design, and critical review.

It is invigorating to postulate a future where LLMs become an integral part of scientific research. As we stand on the precipice of this new era, we are tasked with not only understanding these transformative potentials but also with guiding this symbiosis of artificial and human intelligence. In doing so, we contribute to shaping an exciting future where our collective ability to investigate, understand, and expand the frontiers of knowledge is unimaginably amplified.

I. LLMs as a Human-Knowledge Interface

Humanity's relentless pursuit of better methods for acquiring, storing, and disseminating knowledge has charted a course from primitive inscriptions to the sophisticated digital databases of today. In this march of progress, LLMs like GPT-4 form the vanguard, with the potential to revolutionize our interaction with scientific knowledge.

At the heart of this revolution lies the concept of the LLM as a two-way interface, a dynamic medium through which humans can both extract and contribute knowledge. This two-fold functionality redefines the relationship between humans and the boundless ocean of scientific literature.

On one hand, LLMs enable the extraction and synthesis of knowledge in an efficient, accessible manner. By deciphering and presenting complex scientific information, they drastically lower the barriers to entry into new fields. This ensures that even newcomers, who might otherwise be deterred by the intricacies of specialist literature, can navigate and comprehend scientific discourse. ChatPDF, for example, allows users to upload a PDF file (usually a scientific paper) and then ask the AI agent questions about the paper, much like a student seeking clarification during a professor's office hours.

On the other hand, LLMs can accelerate and refine the process of knowledge contribution. For instance, harnessing an LLM's capacity for multi-step inference and decision-making, researchers can navigate the labyrinthine expanse of scientific literature to identify the most pertinent papers. This doesn't merely expedite the literature review process; it also enhances the scientific paper's quality by ensuring a thorough and relevant survey of existing knowledge.

Furthermore, LLMs can play an instrumental role in the composition of scientific papers. By assisting in language production, they can serve as indispensable aids to scholars, particularly those for whom English is a second language. More than just linguistic assistance, LLMs can help structure logical narratives and ensure coherency, a function that is pivotal in scientific discourse, where complex ideas and findings must be meticulously organized and presented.

By functioning as both a distiller of existing knowledge and a facilitator of new knowledge creation, LLMs could turbocharge scientific progress. This dual role, encapsulating knowledge extraction and contribution, envisions LLMs as more than just tools - they could be collaborative partners, poised to reshape the knowledge landscape in an unprecedented way.

II. Evaluating and Amplifying LLMs' Scientific Proficiency

Let us also consider the fundamental compatibility between LLMs and the nature of human scientific knowledge. Our understanding of the universe is encoded in language, both natural and mathematical. The essence of science lies in its narrative, in the hypotheses posited, the methodologies explained, the results shared, and the conclusions drawn—all through the medium of language. This narrative nature of scientific knowledge makes it a fitting task for LLMs to handle.

Reflecting on the works of eminent thinkers such as David Hilbert, Kurt Gödel, and Alan Turing, one might draw parallels between their philosophical and theoretical explorations and the functioning of LLMs. Hilbert's pursuit of a complete and consistent formal system for mathematics resonates with our aspirations for LLMs: to create a model that can fully and accurately represent our scientific knowledge. The formal proofs at the center of that pursuit are, in essence, structured mathematical narratives, not dissimilar to natural language. Given this perspective, LLMs, trained extensively on natural language data, should theoretically possess a certain aptitude for grappling with scientific concepts. Yet, as Gödel's Incompleteness Theorems show, no consistent formal system powerful enough to express basic arithmetic can also be complete: some true statements will always lie beyond its proofs. Even with the best of our tools, some questions will remain beyond reach. Turing's work on undecidable problems reinforces this, while also suggesting a provocative counterpoint: that LLMs might help identify such boundaries in our knowledge systems.

LLMs, while already exhibiting an impressive ability to handle scientific queries, require continuous assessment and improvement. To truly unlock the potential of LLMs in science, we must continuously refine their ability to comprehend, interpret, and generate scientific content. This includes a nuanced understanding of not just natural language, but also the languages of mathematics and logic that underpin scientific discourse. Herein lies a formidable challenge, but also an exhilarating opportunity. As we augment the proficiency of LLMs in the domain of science, we are essentially turbocharging our collective ability to investigate, understand, and expand the frontiers of knowledge.

An exciting step taken in this direction is the integration of WolframAlpha into ChatGPT. WolframAlpha, which draws on the computational knowledge behind Mathematica, should expand ChatGPT's ability to tackle complex science questions, especially those that call for precise computation. As we stand at the cusp of an era where LLMs become an integral part of scientific research, it is imperative that we understand the transformative potential they hold.

III. One more thing ...

In the summer of 2023, prominent AI for Science pioneer DeepMind published an article in Nature, demonstrating that its AI agent, AlphaDev, used reinforcement learning to discover enhanced sorting algorithms, surpassing those honed by scientists and engineers over decades. Sorting is used by billions of people every day without them realising it. It underpins everything from ranking online search results and social posts to how data is processed on computers and phones, so an improvement at this level has system-wide significance.

As if that were not mind-blowing enough, a mere few days later, Dimitris Papailiopoulos, associate professor at the University of Wisconsin-Madison, announced on Twitter that he had successfully prompted GPT-4 to discover the same breakthrough AlphaDev did. This stirred up a frenzy on the social media platform, eventually catching the attention of its eccentric billionaire owner, Elon Musk. The fact that two different AI systems were able to discover this new "science" makes it even more exciting, as it demonstrates, perhaps for the first time since the Enlightenment, a scalable path towards scientific discoveries.
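To make concrete what kind of routine is at stake, here is a minimal Python sketch of a fixed-length sort for three values, built from a fixed schedule of compare-and-swap steps. It is an illustration only, under stated assumptions: it is not the sequence AlphaDev or GPT-4 found (those results concern low-level instruction sequences), and the names `compare_exchange`, `SORT3_NETWORK`, `sort3`, and `network_is_correct` are hypothetical, chosen for this example.

```python
from itertools import permutations


def compare_exchange(values, i, j):
    """Swap values[i] and values[j] in place if they are out of order."""
    if values[i] > values[j]:
        values[i], values[j] = values[j], values[i]


# Hypothetical comparator schedule for three elements (a textbook
# bubble-sort-style network, NOT the routine AlphaDev discovered).
SORT3_NETWORK = [(0, 1), (1, 2), (0, 1)]


def sort3(a, b, c):
    """Sort exactly three values using the fixed comparator schedule."""
    values = [a, b, c]
    for i, j in SORT3_NETWORK:
        compare_exchange(values, i, j)
    return tuple(values)


def network_is_correct():
    """Check the schedule exhaustively on every ordering of three values."""
    return all(sort3(*p) == (1, 2, 3) for p in permutations((1, 2, 3)))
```

Because the input length is fixed, the whole routine is a short, branch-light sequence of steps that can be verified exhaustively, which is part of why shaving even a single step from such a routine is both hard to find and easy to check.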

One of the defining features of advanced large language models (LLMs), as leveraged by Dr. Papailiopoulos in the previous example, is their capacity to mimic a "Chain of Thoughts": the ability to engage in multi-step inference and decision-making. This powerful functionality mirrors a key attribute that scientists rely on during research: the ability to sequentially reason, make logical connections, and draw nuanced conclusions.

The "Chain of Thoughts" aspect of LLMs essentially allows them to move beyond single, isolated responses and engage in sustained interactions. For example, Auto-GPT can parse complex queries, remember past interactions, reason about context, and generate appropriate responses. This quality enables it to emulate the investigative process inherent to scientific work, thereby opening new frontiers of possibility.

The scientific method, rooted in empiricism and iterative learning, forms the philosophical backbone of scientific investigation. It consists of generating hypotheses, designing and conducting experiments, analyzing data, and then either confirming, refuting, or refining hypotheses based on the findings. Through their "Chain of Thoughts" capabilities, LLMs echo this systematic approach, being able to 'reason' through a problem, consider different facets, analyze, and generate informed responses.

An important caveat must be noted here. LLMs can't genuinely hypothesize or question; they merely simulate these processes based on patterns gleaned from their training data. Despite this, LLMs' 'thought simulation' presents exciting possibilities. Imagine an LLM aiding in hypothesis generation or experimental design by drawing upon vast amounts of scientific literature to suggest novel combinations or perspectives. Or consider the role of an LLM as an indefatigable reviewer, combing through reams of data for patterns that might elude human scrutiny.

The implications are significant. The role of the scientist may evolve, as tasks that were once solely in the human domain become shared with advanced AI tools. This raises profound questions about the nature of scientific investigation and the human/AI partnership. How will the scientific method be adapted or expanded in this new paradigm? What checks and balances should be in place to ensure scientific rigor and integrity as we increasingly rely on these tools? And finally, how will the synergy between human and machine-led inquiry catalyze the next wave of scientific discovery?

These are exciting times. The advent of LLMs beckons us into an era of unprecedented scientific collaboration between humans and machines. As we grapple with these profound philosophical questions and implications, we are not merely spectators but active participants in defining the future of scientific exploration.